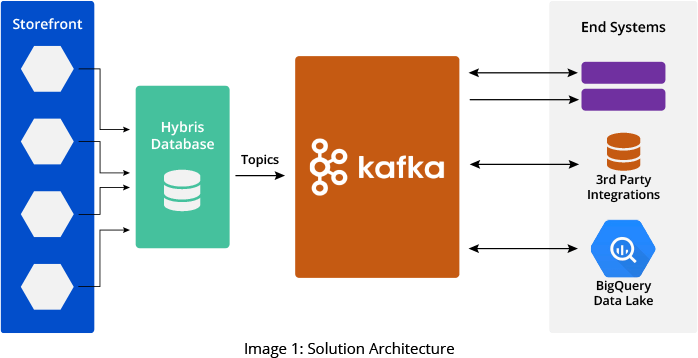

Our IA team developed a solution that sends data from the Hybris database to third parties for reporting and further processing. To achieve this, we built a series of Java-based Kafka producers that read data from Hybris and publish it to Kafka topics. We then built a set of Java-based Kafka consumers that poll those topics and deliver the data to the various end systems.

Our IA team designed over 40 Kafka topics on Confluent Kafka brokers to cover various aspects of the business: customer enrollments, customer updates, orders, products, event registration, and many customer KPIs.

Each topic has a matching Kafka consumer, also written in Java, that reads the associated stream. The 40+ Kafka consumers were deployed on Kubernetes in GCP. Our team configured multiple pods for each consumer, providing scalability and high availability.
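To illustrate how several pods can share the work of one consumer, here is a minimal sketch of a consumer configuration. Kafka spreads a topic's partitions across all consumers that use the same `group.id`, so every pod of a given consumer would use the same value. The class name, the `<topic>-consumer` naming scheme, and the broker address are hypothetical and not taken from the project; the property keys are standard Kafka consumer settings.

```java
import java.util.Properties;

// Hypothetical helper: builds the settings that every pod of one consumer would share.
final class ConsumerConfigSketch {

    static Properties forTopic(String bootstrapServers, String topic) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        // All pods of the same consumer use one group id, so Kafka divides
        // the topic's partitions among them (naming scheme is an assumption).
        props.setProperty("group.id", topic + "-consumer");
        // Commit offsets manually, only after the end system accepted the data (assumed policy).
        props.setProperty("enable.auto.commit", "false");
        props.setProperty("auto.offset.reset", "earliest");
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        return props;
    }
}
```

A call such as `ConsumerConfigSketch.forTopic("broker:9092", "orders")` would yield properties that could be passed to a `KafkaConsumer` constructor. Adding pods beyond the number of partitions in a topic would leave the extra pods idle.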

To avoid data loss, failed messages are stored in a catch-all Kafka topic called the Dead Letter Queue (DLQ). End-system consumers read the DLQs in addition to their designated topics. Where necessary, the consumers route messages that hit technical failures to the DLQ so that the data can be automatically resynced to consumer applications and other endpoints. DLQ messages that pertain to business failures are sent back to the e-commerce applications for reprocessing.
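The split between technical and business failures described above could be sketched as follows. This is an illustration only: the class, the enum names, and the rule that I/O errors and timeouts count as technical failures are assumptions, not the project's actual classification logic.

```java
import java.io.IOException;
import java.util.concurrent.TimeoutException;

// Hypothetical router that decides what happens to a message read from the DLQ.
final class DlqRouter {

    enum FailureKind { TECHNICAL, BUSINESS }

    enum Action { RESYNC_TO_ENDPOINT, RETURN_TO_ECOMMERCE }

    // Assumed rule: connectivity problems are technical, everything else is
    // treated as a business failure (for example, a payload rejected by validation).
    static FailureKind classify(Throwable error) {
        if (error instanceof IOException || error instanceof TimeoutException) {
            return FailureKind.TECHNICAL;
        }
        return FailureKind.BUSINESS;
    }

    // Technical failures are retried against the end system; business failures
    // go back to the e-commerce application for reprocessing.
    static Action actionFor(FailureKind kind) {
        return kind == FailureKind.TECHNICAL
                ? Action.RESYNC_TO_ENDPOINT
                : Action.RETURN_TO_ECOMMERCE;
    }
}
```

In a real consumer, the failure kind would more likely travel with the message (for example, in a record header) than be derived from an exception at read time; the sketch only shows the decision itself.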

Our team used Kafka monitoring APIs and Prometheus to pull KPIs such as consumer lag, active connections, and traffic volume into a handler application to ensure consistent performance. We also set performance thresholds and configured alerts. For debugging, our team used Splunk to search the logs.
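As an example of one such KPI, consumer lag for a partition is the gap between the latest offset written to the partition and the offset the consumer group has committed. The sketch below sums that gap across partitions and compares it to a threshold. The class name, the method names, and the choice to treat a partition with no committed offset as starting from 0 are assumptions for illustration; in practice the offsets would come from the monitoring APIs or from Prometheus metrics.

```java
import java.util.Map;

// Hypothetical lag check, keyed by partition number.
final class LagCheck {

    // Sums (end offset - committed offset) over all partitions.
    static long totalLag(Map<Integer, Long> endOffsets, Map<Integer, Long> committedOffsets) {
        long total = 0L;
        for (Map.Entry<Integer, Long> entry : endOffsets.entrySet()) {
            // Assumption: a partition without a committed offset has consumed nothing yet.
            long committed = committedOffsets.getOrDefault(entry.getKey(), 0L);
            // Guard against a negative gap if the two snapshots were taken at different times.
            total += Math.max(0L, entry.getValue() - committed);
        }
        return total;
    }

    // An alert fires only when the lag exceeds the configured threshold.
    static boolean shouldAlert(long totalLag, long threshold) {
        return totalLag > threshold;
    }
}
```

For instance, with end offsets `{0: 100, 1: 50}` and committed offsets `{0: 90, 1: 50}`, `totalLag` returns 10, which would not trigger an alert against a threshold of 1000.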

Key takeaways from the solution include:

- Shift to a cloud-based streaming infrastructure: Our team helped the company move from an on-premises system based on REST APIs to a modern, cloud-based streaming infrastructure.

- No data loss: The new system can handle a massive amount of company data with no loss. Any data that fails processing is captured in the catch-all Kafka DLQ topic and reprocessed.

- Zero downtime: The new system features multiple redundant cloud-based virtual machines that ensure zero downtime.