A software system often consists of many jobs and DAGs (Directed Acyclic Graphs) scheduled to run at regular intervals, processing massive volumes of data of different types. In such a system, monitoring the jobs is crucial to maintaining data integrity. Without automation, production engineers spend hours watching these jobs and may still be late in taking action when one fails. This is where alerts come to the rescue.

On any given day, dozens of alerts require an engineer’s attention. It is important to sift through the list and attend to high-priority alerts. The Subscription-Based Alerts module is a custom module developed in Python that allows users to subscribe to alerts specific to a job or interface. Targeted alerts solve the problem of spending hours monitoring jobs and receiving hundreds of alerts, only to find that most are insignificant.

Subscription-based Alerts

The Alerts engine delivers data health and pipeline health notifications through several channels, such as email, SMS, or Slack.

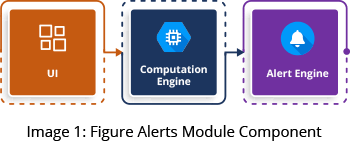

The Alerts module consists of three components (Image 1):

- Alerts UI (user interface)

- Computation Engine

- Alert Engine

Alerts UI

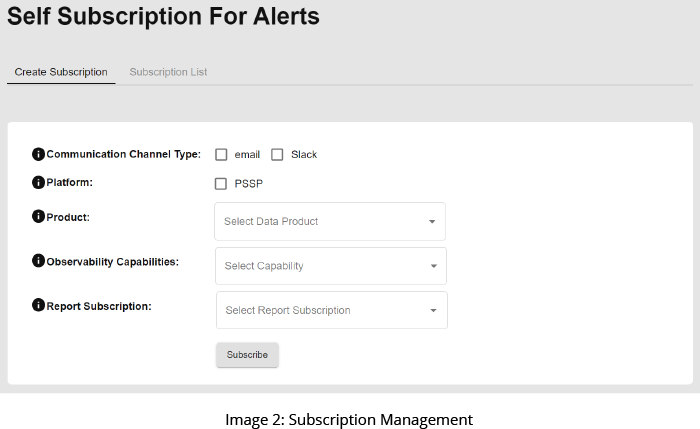

The Alerts UI allows you to subscribe and manage subscriptions to various alerts. During the subscription process, you can opt for any or all of the following alert notification channels:

- Email

- Slack

- SMS

Similarly, you can also select various platforms, interfaces, capabilities, and reports for which you wish to receive notifications.

Adding new platforms or interfaces is a one-time activity performed as part of the initial setup. Newly added platforms and interfaces then become visible in the UI for users to subscribe to.

Once users subscribe, they start receiving notifications whenever a subscribed event occurs.

Subscription Management

Sometimes a user wants to disable alerts temporarily or opt out of a subscription permanently. To address such scenarios, the following options are provided:

- Unsubscribe: Once a user unsubscribes, they stop receiving alerts.

- Pause/Resume: Pausing disables alerts temporarily; the user can resume them later.

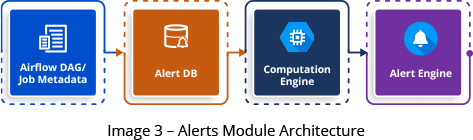
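The subscription options above can be pictured as a small state machine. The following is a minimal sketch, not the module's actual code: the class names, the three states, and the rule that an unsubscribed user cannot simply resume are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class SubscriptionState(Enum):
    ACTIVE = "active"
    PAUSED = "paused"
    UNSUBSCRIBED = "unsubscribed"


@dataclass
class Subscription:
    """Hypothetical subscription record; field names are illustrative."""
    user: str
    report: str  # e.g. "FAIL" or "SLA"
    state: SubscriptionState = SubscriptionState.ACTIVE

    def pause(self) -> None:
        # Only an active subscription can be paused.
        if self.state is SubscriptionState.ACTIVE:
            self.state = SubscriptionState.PAUSED

    def resume(self) -> None:
        # Only a paused subscription can be resumed (assumption:
        # an unsubscribed user has to subscribe again instead).
        if self.state is SubscriptionState.PAUSED:
            self.state = SubscriptionState.ACTIVE

    def unsubscribe(self) -> None:
        self.state = SubscriptionState.UNSUBSCRIBED

    def should_notify(self) -> bool:
        return self.state is SubscriptionState.ACTIVE


sub = Subscription(user="engineer@example.com", report="FAIL")
sub.pause()
print(sub.should_notify())  # False
sub.resume()
print(sub.should_notify())  # True
```

Keeping the state explicit lets the rest of the pipeline ask a single question, `should_notify()`, without knowing why a subscription is inactive.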

Computation Engine

The computation engine continually scans for events that have occurred since its last run and are eligible to be sent as alerts. Examples of eligible events include job failures, SLA misses, and job successes.

The computation engine looks up all reports that have an active subscription and runs the rules you configured to generate alert data. It dynamically builds report queries from the configured rules and executes them; the query results become the alerts. The alert engine then sends notifications to subscribers.
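The scan step described above can be sketched in a few lines. This is an illustrative sketch under assumptions: events are plain dictionaries with hypothetical `report` and `updated_at` keys, and the set of reports with active subscribers is already known.

```python
from datetime import datetime


def eligible_events(events, active_reports, last_run):
    """Keep events updated after last_run whose report has an active subscription."""
    return [
        event for event in events
        if event["updated_at"] > last_run and event["report"] in active_reports
    ]


# Hypothetical sample data for illustration only.
last_run = datetime(2024, 1, 1, 9, 0)
events = [
    {"dag_id": "dag_a", "report": "FAIL", "updated_at": datetime(2024, 1, 1, 9, 30)},
    {"dag_id": "dag_b", "report": "FAIL", "updated_at": datetime(2024, 1, 1, 8, 0)},
    {"dag_id": "dag_c", "report": "SUCCESS", "updated_at": datetime(2024, 1, 1, 9, 45)},
]
active_reports = {"FAIL", "SLA"}  # reports with at least one active subscriber

new_events = eligible_events(events, active_reports, last_run)
print([event["dag_id"] for event in new_events])  # ['dag_a']
```

Here `dag_b` is dropped because it predates the last run, and `dag_c` is dropped because nobody subscribes to the SUCCESS report in this example.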

Alert Engine

The alert engine defines a different template for each alert and sends notifications to subscribers on their preferred channels. The data it receives from the computation engine includes the alerts and the user subscription data.
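A per-report template combined with per-subscriber channel selection might look like the sketch below. The template wording, the dictionary shapes, and the stub senders are assumptions for illustration; a real deployment would call an email, SMS, or Slack client where the stubs only record the message.

```python
from string import Template

# Hypothetical templates, one per report type.
TEMPLATES = {
    "FAILURE": Template("Job $dag_id failed on interface $interface_id."),
    "SLA": Template("Job $dag_id missed its SLA on interface $interface_id."),
}


def render_alert(alert):
    """Fill the template that matches the alert's report type."""
    return TEMPLATES[alert["report"]].substitute(alert)


def dispatch(alert, subscriptions, senders):
    """Send the rendered alert to every subscriber of this report, per channel."""
    message = render_alert(alert)
    sent = []
    for sub in subscriptions:
        if sub["report"] != alert["report"]:
            continue
        for channel in sub["channels"]:
            senders[channel](sub["user"], message)
            sent.append((sub["user"], channel))
    return sent


def make_stub_sender(channel, outbox):
    """Stand-in for a real channel client; it only records what would be sent."""
    def send(user, message):
        outbox.append((channel, user, message))
    return send


outbox = []
senders = {name: make_stub_sender(name, outbox) for name in ("email", "sms", "slack")}
subscriptions = [
    {"user": "asha", "report": "FAILURE", "channels": ["email", "slack"]},
    {"user": "ben", "report": "SLA", "channels": ["sms"]},
]
alert = {"report": "FAILURE", "dag_id": "dag_a", "interface_id": "if1"}

print(dispatch(alert, subscriptions, senders))
# [('asha', 'email'), ('asha', 'slack')]
```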

Rules

The rules give the computation engine complete details about the query. For example, they describe the report type (e.g., ‘FAILURE’ or ‘SLA’), which determines the alert template that is chosen. Rules also tell the computation engine which records to consider during evaluation and whether to send an alert. Every capability is assigned a rule that evaluates only the records updated within the last 12 hours. This avoids picking up old records unnecessarily, which could slow down the alert engine, and it helps prevent repetitive alerts from being sent to users.

You must configure rules for any new reports you plan to add. You can do this manually, or you can ask the alerts engine development team to add the rules on the backend. Either way, the computation engine then forms its queries dynamically based on the established rules.

Further, you can configure different rules for additional reports. The rules engine then evaluates alerts in various scenarios depending on your requirements.

Here is a sample rule that selects the latest failed executions (last 12 hours):

Rule Name: FAIL

RULE: Select interface_id, geography_id, dag_id with status = ‘FAIL’
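To make the idea of forming a query from a rule concrete, here is a minimal sketch that turns the sample rule into a parameterized SQL query and runs it against an in-memory SQLite table. The rule dictionary shape, the `job_runs` table, and the `updated_at` column are assumptions; the article does not show how rules or run records are actually stored.

```python
import sqlite3
from datetime import datetime, timedelta

# Hypothetical rule representation for the FAIL rule above.
RULE = {
    "name": "FAIL",
    "columns": ["interface_id", "geography_id", "dag_id"],
    "status": "FAIL",
    "lookback_hours": 12,
}


def build_query(rule, table="job_runs"):
    """Build the SELECT for a rule; values are bound later as parameters."""
    # Column and table names must come from trusted configuration only.
    columns = ", ".join(rule["columns"])
    return f"SELECT {columns} FROM {table} WHERE status = ? AND updated_at >= ?"


def run_rule(conn, rule, now):
    """Execute the rule's query for records inside the lookback window."""
    cutoff = now - timedelta(hours=rule["lookback_hours"])
    return conn.execute(build_query(rule), (rule["status"], cutoff.isoformat())).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE job_runs (interface_id TEXT, geography_id TEXT, "
    "dag_id TEXT, status TEXT, updated_at TEXT)"
)
now = datetime(2024, 1, 1, 12, 0, 0)
conn.executemany(
    "INSERT INTO job_runs VALUES (?, ?, ?, ?, ?)",
    [
        ("if1", "us", "dag_a", "FAIL", (now - timedelta(hours=2)).isoformat()),
        ("if2", "eu", "dag_b", "FAIL", (now - timedelta(hours=20)).isoformat()),
        ("if3", "us", "dag_c", "SUCCESS", (now - timedelta(hours=1)).isoformat()),
    ],
)

print(run_rule(conn, RULE, now))  # [('if1', 'us', 'dag_a')]
```

Only `dag_a` is returned: `dag_b` failed outside the 12-hour window and `dag_c` did not fail. Timestamps are stored as ISO 8601 strings in one consistent format, so string comparison matches chronological order in this sketch.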

Capabilities

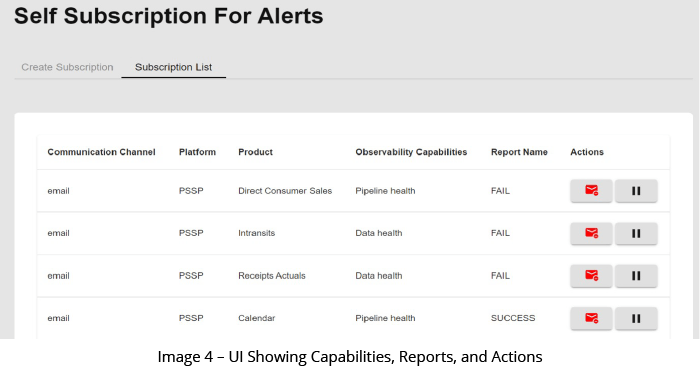

The Alerts Module delivers alerts in two different capability domains:

- Pipeline Health

- Data Health

Depending on your requirements, you can manually add more capabilities in the backend.

Pipeline Health

Pipeline Health refers to the health of the data flow from the source, across all data layers, and into the final layer. The DAGs/Jobs scheduled in production systems can be in any one of the following states:

- Started/Running

- Success

- Failed

- Skipped

Currently, alerts are configured for these three scenarios:

- Success: Users can subscribe to this report if they want to get notified when a job completes successfully.

- Fail: Users who subscribe to this report are notified when a job fails.

- SLA Miss: Users can subscribe to this report if they want to be notified when a job fails to complete within the stipulated time.
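The three scenarios above can be expressed as a small classification function. This is a sketch under assumptions: the status strings, the report names, and the choice to evaluate the SLA independently of the final status (so a late success triggers both reports) are illustrative and not confirmed by the module.

```python
from datetime import datetime, timedelta


def triggered_reports(status, started_at, finished_at, sla, now):
    """Return the pipeline health reports that a single job run triggers."""
    reports = []
    if status == "SUCCESS":
        reports.append("SUCCESS")
    elif status == "FAILED":
        reports.append("FAIL")
    if status != "SKIPPED":
        # For a run still in progress, measure elapsed time up to now.
        end = finished_at if finished_at is not None else now
        if end - started_at > sla:
            reports.append("SLA_MISS")
    return reports


start = datetime(2024, 1, 1, 0, 0)
sla = timedelta(hours=1)
now = start + timedelta(hours=2)

print(triggered_reports("SUCCESS", start, start + timedelta(minutes=30), sla, now))
# ['SUCCESS']
print(triggered_reports("RUNNING", start, None, sla, now))
# ['SLA_MISS']
```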

Data Health

Data health describes the quality and freshness of the data transmitted across the various data layers. Metrics used to measure data health include the overall count of records transmitted, the amount of null or blank data, and the amount of data duplication.
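As an illustration of the metrics just listed, the following sketch computes a record count, the number of records containing null or blank values, and the number of duplicates for a batch of rows. The function name, the dictionary-based record format, and the use of key fields to define a duplicate are assumptions; the article does not describe how the checks are implemented.

```python
def data_health_metrics(records, key_fields):
    """Compute simple data health metrics for a list of dict records."""
    null_or_blank = sum(
        1 for record in records
        if any(
            value is None or (isinstance(value, str) and not value.strip())
            for value in record.values()
        )
    )
    seen = set()
    duplicates = 0
    for record in records:
        # A record is a duplicate if its key fields were already seen.
        key = tuple(record.get(field) for field in key_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {
        "total": len(records),
        "null_or_blank": null_or_blank,
        "duplicates": duplicates,
    }


# Hypothetical sample batch for illustration only.
batch = [
    {"id": 1, "name": "a"},
    {"id": 2, "name": ""},
    {"id": 1, "name": "a"},
    {"id": 3, "name": None},
]
print(data_health_metrics(batch, key_fields=["id"]))
# {'total': 4, 'null_or_blank': 2, 'duplicates': 1}
```

A Data Health Fail alert could then be raised when any of these numbers crosses a threshold configured for the report.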

The following data health reports are currently enabled:

- Data Health Fail Report: Users can subscribe to this alert to learn about failures in any of the data quality checks.

- Data Health Execution Fail Report: This alert is sent when the job that executes the data quality check itself fails. It is mainly oriented toward technical staff.

The Alerts Module is currently integrated with BEAT.

BEAT is GSPANN’s innovative proprietary ETL and data testing framework that automates data quality, audits, and profiling.

Conclusion

Alert notifications are sent to subscribers in near real time, which supports effective monitoring and prompt, appropriate action. Production engineers no longer need to spend countless hours monitoring jobs in progress. The Alerts module makes their job easier by notifying them of the events they subscribed to.